In December 2016 Edgar Maddison Welch walked into a popular pizza restaurant in Washington DC and fired three shots from an AR-15-style rifle. Welch later said that he had been trying to save children locked in the basement who were being abused by powerful Democrats, including Hillary Clinton.

The shooting, driven by the conspiracy theory known as Pizzagate, became a warning of how online disinformation can spread and lead to violence. In 2020 an Ipsos poll found that 17 per cent of Americans believed that a group of Satan-worshipping elites who run a child sex-trafficking ring were trying to control US politics and media.

From reports that Palestinian journalist Shireen Abu Akleh was killed by a Palestinian, to tweets suggesting that former Labour leader Jeremy Corbyn was a terrorist sympathiser, many falsehoods have spread online and come to be widely believed, says Marc Owen Jones, Associate Professor of Middle East Studies and Digital Humanities.

The spread of fake news and disinformation has been weaponised by states including Saudi Arabia, a digital superpower which has used social media to drown out criticism of its foreign policy objectives, including the war on Yemen and the killing of Jamal Khashoggi.

"These Saudi disinformation campaigns, spread frequently by bots and trolls, are aimed domestically, regionally and internationally," Jones tells MEMO. "Domestically they are designed to dilute critical conversations and silence potential critics."

"Regionally they are aimed at targeting foreign institutions they see as unfriendly or critical – journalists or news channels. Internationally they are aimed at dissidents or figures seen as critical of the government – such as Jeff Bezos."

Among the Saudi government's targets are women who criticise Crown Prince Mohammed Bin Salman, known as MBS, writes Jones in his book, Digital Authoritarianism in the Middle East. One of the most sustained of these campaigns was the 2020 assault on Al Jazeera anchor Ghada Oueiss, in which photos were stolen from her phone and doctored to make it look as though she was nude in a hot tub.

The images were circulated in tens of thousands of tweets from accounts displaying images of MBS and the Saudi flag. Eight months later, Oueiss filed a lawsuit against Bin Salman and several other officials, alleging that she had been targeted because of her critical coverage of human rights abuses in the Gulf state.

The attack came at a time when online misogyny had long been a growing threat, with so-called social media influencers like Andrew Tate telling millions of followers that women belong in the home and are a man's property.

"Leaders who endorse misogynistic ideas simply normalise and legitimise the behaviour of other influencers. They feed off each other in a mutually reinforcing strategy that creates a space for violent and misogynistic speech," Jones tells MEMO.

"Many feel that because they have latent feelings that are shared by other influential figures, that those feelings are acceptable or to be encouraged, when in fact they are deeply problematic. Misogyny has always existed; it just appears to be becoming more of a movement."

Over the past two decades, social media platforms have grown and evolved into businesses operating on a global scale. They have expanded, says Jones, under the guise of spreading freedom, yet at the same time hate speech is growing on Twitter.

"Now they have created a massive system that cannot be effectively controlled without reducing its reach, which would be a problem for their revenue. They rely on AI systems, instead of human moderators, to regulate content – a very imperfect system."

"Their investment in different languages is patchy, and above all, they sell engagement. Their incentives to regulate hate speech are political and depend on who is doing the speech. The will may exist, but it is not a priority, and the ability of having 'easy access social media' works in contrast to a regulated platform that is attentive to myriad global forms of hate speech."

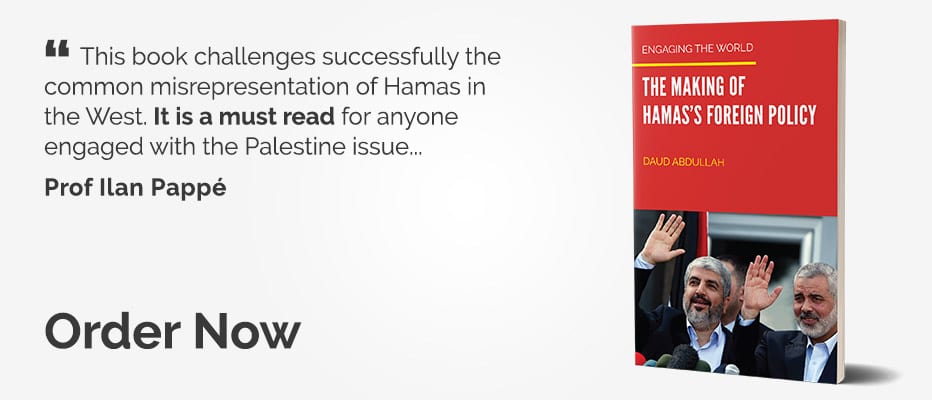

In 2022, tech billionaire Elon Musk bought Twitter for $44 billion, leaving critics wondering what would happen to trolls, misogynistic profiles and state-coordinated disinformation.

"It will get worse," says Jones. "Musk is already monetising access to Twitter's API, making it harder for researchers to hold Twitter accountable. The paid model means bad actors can algorithmically promote their content by subscribing to Twitter Blue."

"The mass sackings of teams responsible for addressing safety and disinformation mean less capacity to tackle bots and trolls. In short, Twitter will become a more potent hate speech and disinformation delivery system – with more Musk content."

![In this photo illustration, the image of Elon Musk is displayed on a computer screen and the logo of twitter on a mobile phone [Muhammed Selim Korkutata - Anadolu Agency]](jpg/aa-20220426-27677626-27677618-elon_musk_twitterc032.jpg)

![Amelia Smith seen at Middle East Monitor's 'Jerusalem: Legalising the Occupation' conference in London, UK on 3 March, 2018 [Jehan Alfarra/Middle East Monitor]](jpg/104a09569b4b.jpg)